FAIRshake

Introduction

The FAIR data principles (findability, accessibility, interoperability, and reusability) were first proposed by Wilkinson et al. (Scientific Data, 2016) as guidelines for improving data reuse infrastructure in academia. More specifically, the FAIR guidelines aim to facilitate the continuous reuse of digital objects and the re-analysis of data, improve automated knowledge integration, and simplify access to tools and resources.

Within the Common Fund Data Ecosystem (CFDE), the FAIR principles are a critical component of our goal to meaningfully synthesize information across Common Fund Data Coordinating Centers (DCCs) to maximize knowledge extraction and promote novel hypothesis generation. To learn more about how DCCs can improve the FAIRness of their digital objects, see the example code and datasets available in the NIH-CFDE FAIR Cookbook.

Resources

General Steps

The steps below are adapted from the FAIRshake User Guide V2 and outline the basics of using FAIRshake, including creating a new project, registering digital objects and rubrics within the project, and running a FAIR assessment.

Starting Projects

-

Navigate to the FAIRshake website and create a user account if you do not already have one.

-

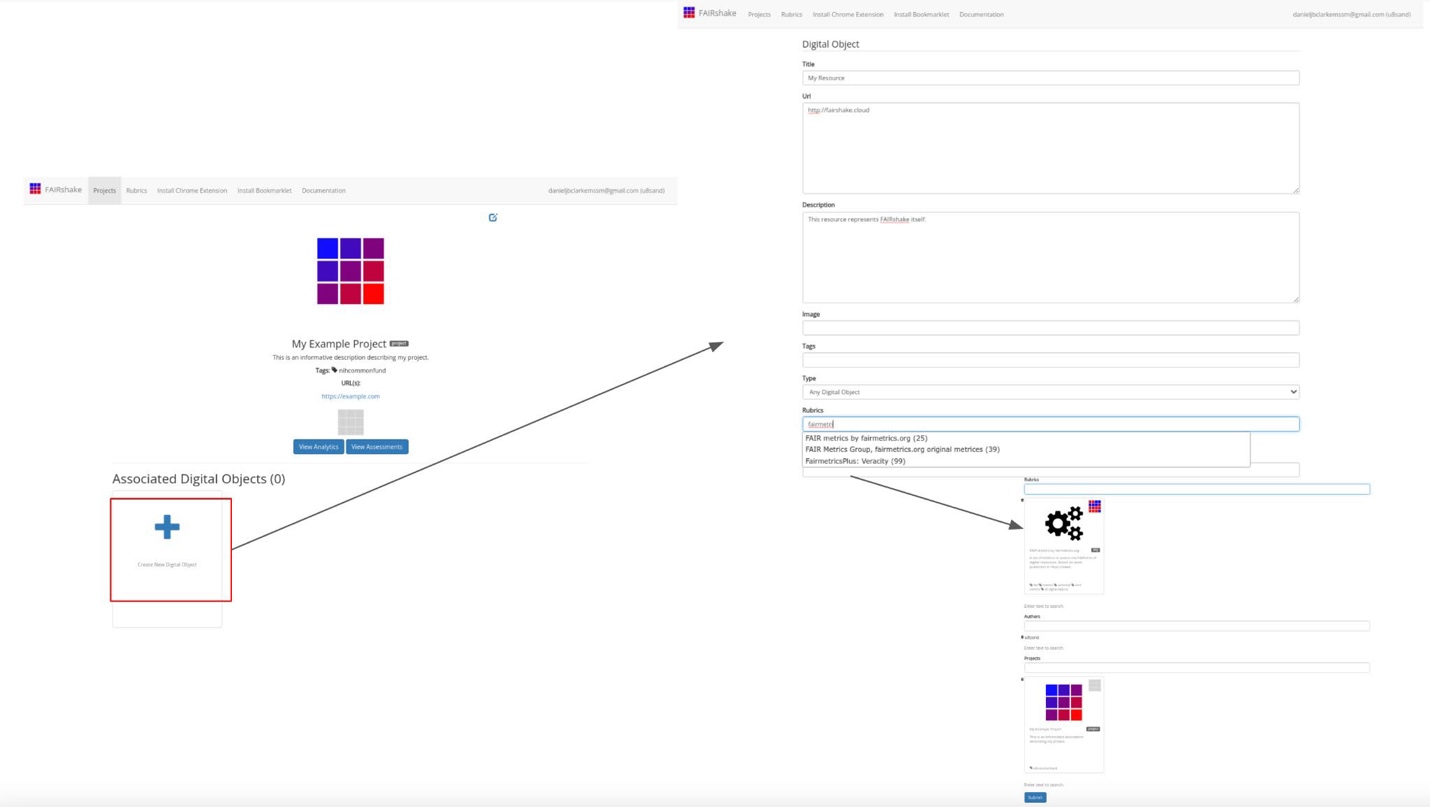

Select the Projects page from the menu at the top of the page, then click on the Create New Project card in the bottom right corner. A project can be used to house a collection of related digital objects that are each associated with at least one FAIR rubric.
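The project structure described above can be sketched as a small Python data model. This is a hypothetical illustration, not FAIRshake's API or internal schema: the `Project` and `DigitalObject` classes, their fields, and the `objects_without_rubric` helper are assumptions made for the example. It captures the one rule the guide states, that every digital object in a project is associated with at least one rubric.

```python
from dataclasses import dataclass, field


@dataclass
class DigitalObject:
    """Hypothetical stand-in for a digital object registered in a project."""
    title: str
    url: str
    rubrics: list[str] = field(default_factory=list)  # names of associated rubrics


@dataclass
class Project:
    """Hypothetical stand-in for a FAIRshake project: a collection of related objects."""
    title: str
    objects: list[DigitalObject] = field(default_factory=list)

    def add(self, obj: DigitalObject) -> None:
        self.objects.append(obj)

    def objects_without_rubric(self) -> list[str]:
        # Each object in a project should be associated with at least one rubric.
        return [obj.title for obj in self.objects if not obj.rubrics]


# Usage with made-up objects and a made-up rubric name.
project = Project("Example DCC project")
project.add(DigitalObject("Dataset A", "https://example.org/a", ["Example rubric"]))
project.add(DigitalObject("Tool B", "https://example.org/b"))
print(project.objects_without_rubric())  # ['Tool B']
```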

Registering Digital Objects

- Navigate to an existing project or the project you just created, and fill in metadata for a digital object you would like to evaluate. Digital objects may be software, datasets, APIs, or workflows, among others.

- The Rubrics field will automatically present a list of potential rubrics that are appropriate for your digital object. If you would like to create your own rubric, see the following section on Associating Rubrics.

- Submitting the form will take you to a new page for your now-registered digital object, which displays its metadata and associated projects and rubrics.
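A minimal sketch of the registration step, assuming a digital object's metadata can be represented as a simple dictionary. The required fields (`title`, `url`, `type`), the `Rubric` class, and the rule for suggesting rubrics by object type are all hypothetical; this guide does not describe how FAIRshake actually chooses the rubrics it presents in the Rubrics field.

```python
from dataclasses import dataclass

REQUIRED_FIELDS = ("title", "url", "type")  # hypothetical minimum metadata


@dataclass(frozen=True)
class Rubric:
    name: str
    object_types: frozenset[str]  # hypothetical: object types this rubric targets


def missing_fields(meta: dict[str, str]) -> list[str]:
    """Return required metadata fields that are absent or empty."""
    return [key for key in REQUIRED_FIELDS if not meta.get(key)]


def suggest_rubrics(meta: dict[str, str], catalog: list[Rubric]) -> list[str]:
    """Suggest rubrics whose target types include the object's (lowercased) type."""
    obj_type = meta.get("type", "").lower()
    return [r.name for r in catalog if obj_type in r.object_types]


# Usage with made-up rubrics and metadata.
catalog = [
    Rubric("Example dataset rubric", frozenset({"dataset"})),
    Rubric("Example software rubric", frozenset({"software", "api"})),
]
meta = {"title": "Tool B", "url": "https://example.org/b", "type": "API"}
print(missing_fields(meta))            # []
print(suggest_rubrics(meta, catalog))  # ['Example software rubric']
```

Matching on a lowercased type keeps the sketch tolerant of inputs such as "API" versus "api"; a real registry would more likely rely on controlled vocabularies.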

Associating Rubrics

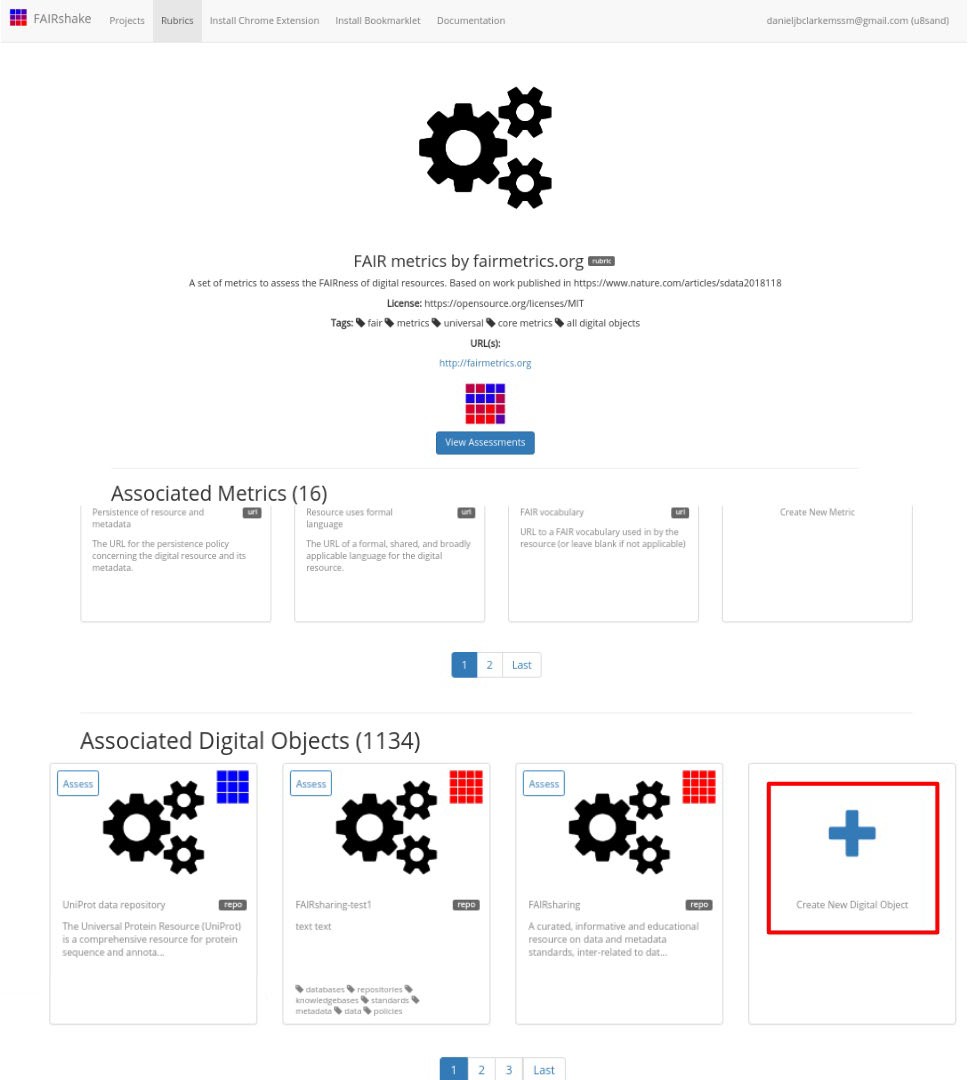

- To create a new rubric for evaluating your digital object, navigate to the Rubrics page via the menu bar, and select the Create New Rubric card in the bottom right.

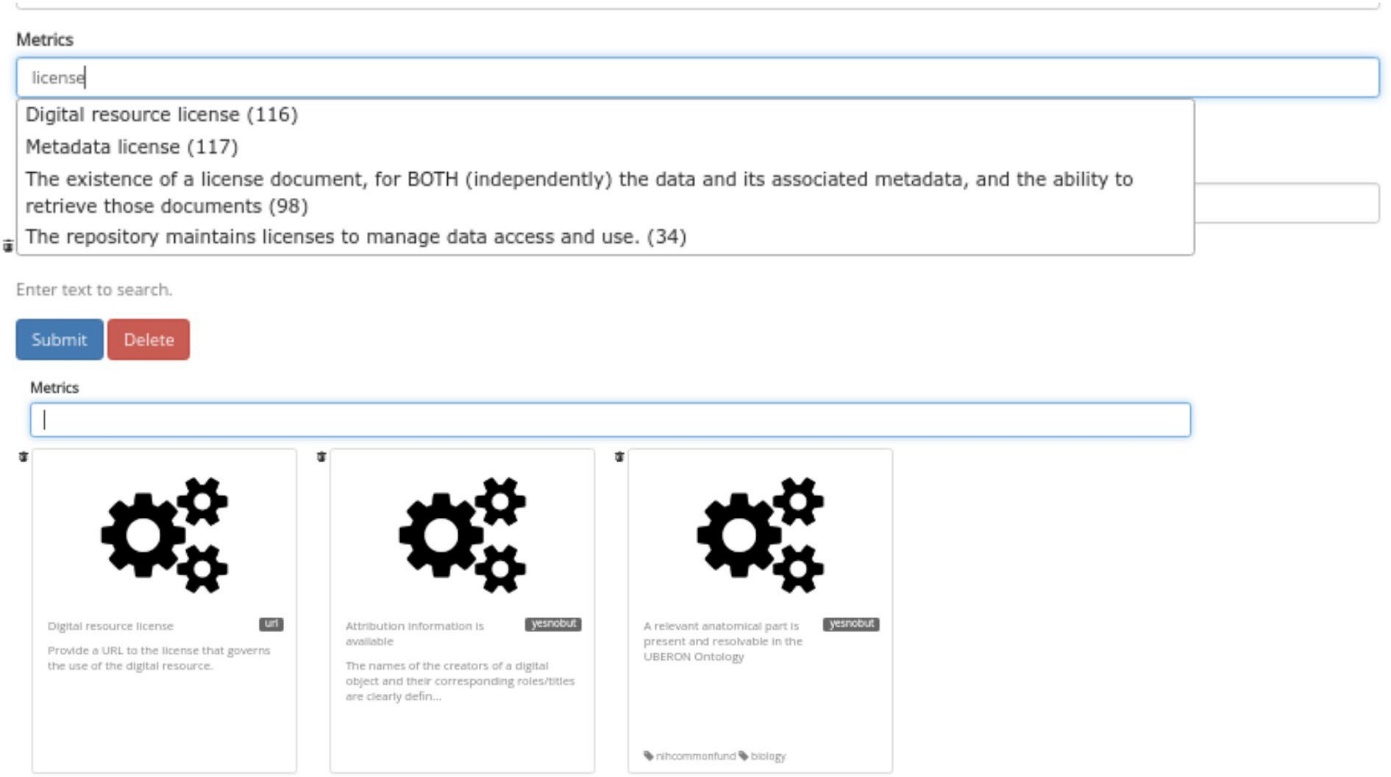

- Use the autocomplete Metrics field to identify existing metrics, or specific assessment questions, that may be relevant for your rubric. Select as many metrics as you would like; these will be included in your FAIR assessment for the digital objects associated with your rubric.

- A pre-existing rubric with a list of universal FAIR metrics developed by Wilkinson et al. (Scientific Data, 2018) can be found here.

- Submit the form to finish building the rubric. Your rubric does not need to meticulously cover every FAIR principle, as long as the principles are broadly represented and the metrics you choose can reasonably determine the FAIRness of your object within the broader community.
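The guidance that a rubric should broadly represent the FAIR principles, without meticulously covering each one, can be checked with a short sketch. It assumes each metric is tagged with the single principle it mainly addresses (`F`, `A`, `I`, or `R`); that tagging, the `Metric` class, and the metric names are hypothetical and not taken from FAIRshake.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    principle: str  # hypothetical tag: one of "F", "A", "I", "R"


def principle_coverage(metrics: list[Metric]) -> Counter:
    """Count how many selected metrics address each FAIR principle."""
    counts = Counter({p: 0 for p in "FAIR"})  # start every principle at zero
    for m in metrics:
        counts[m.principle] += 1
    return counts


def missing_principles(metrics: list[Metric]) -> list[str]:
    """Principles with no metric at all, i.e. not even broadly represented."""
    return [p for p, n in principle_coverage(metrics).items() if n == 0]


# Usage with made-up metrics.
rubric = [
    Metric("Persistent identifier", "F"),
    Metric("Machine-readable metadata", "F"),
    Metric("Open license", "R"),
]
print(missing_principles(rubric))  # ['A', 'I']
```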

Performing Assessments

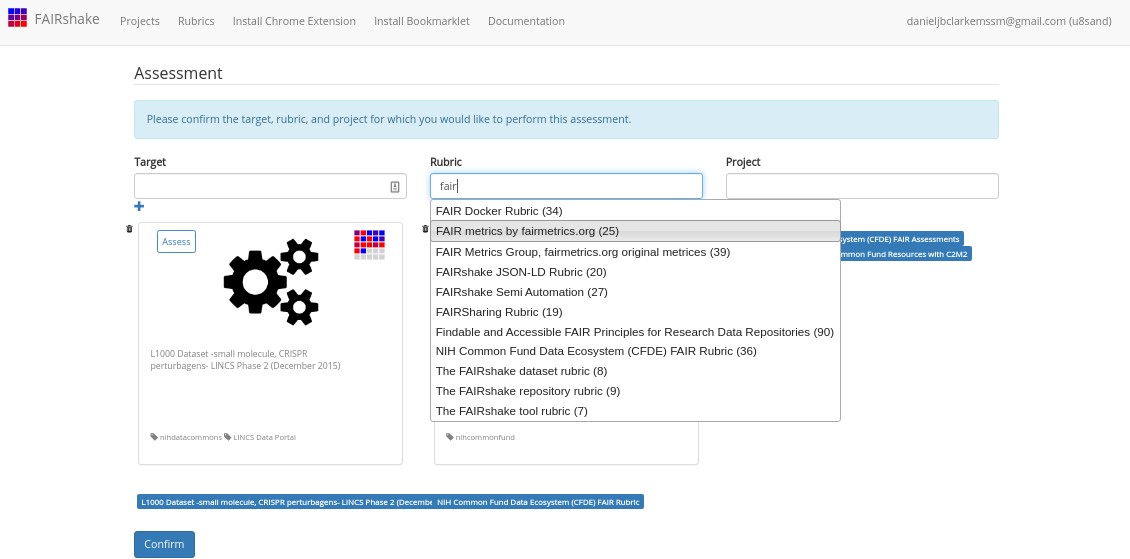

- Ensure that all digital objects in your project have been associated with a rubric.

- Click on the Assess button in the top left corner of a registered digital object's icon, either from the project page or from the digital object page.

- Select the rubric you would like to use to assess the digital object (it does not need to be the auto-selected rubric), and confirm that the correct digital object and project (if applicable) are also selected.

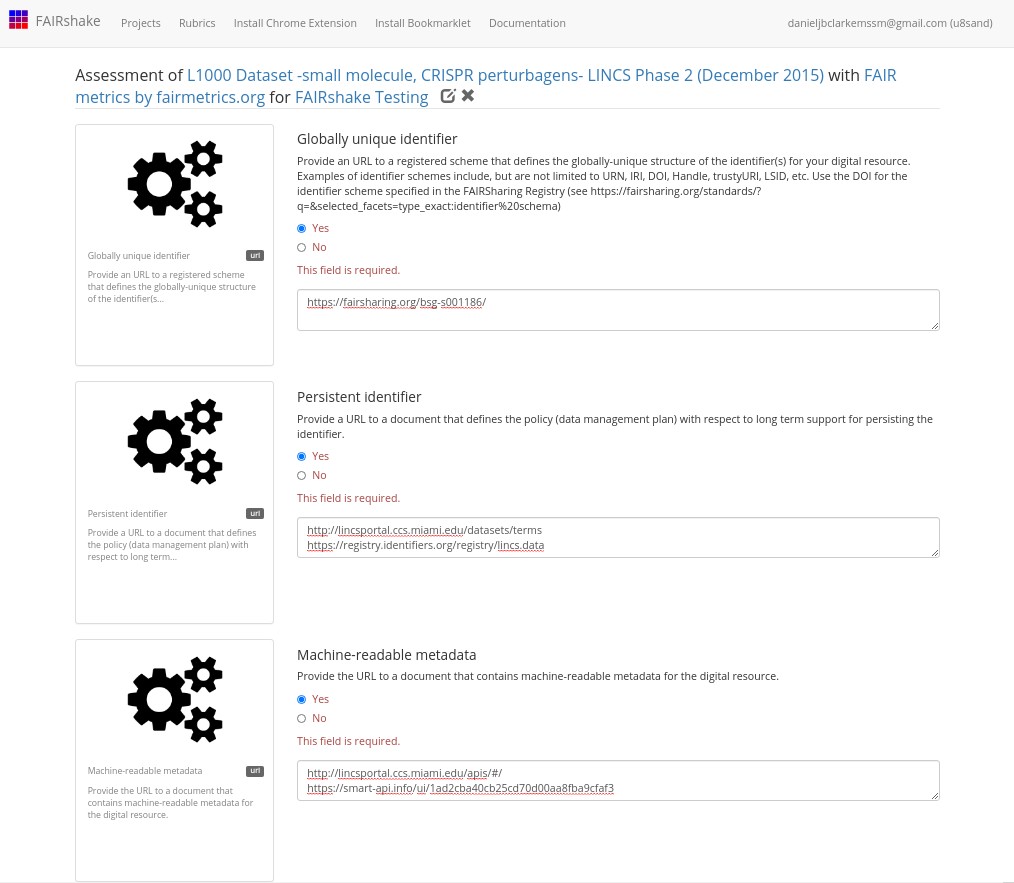

- Begin the manual assessment of the digital object. For each metric, carefully read and consider the prompts, and then respond to the best of your knowledge. Add any comments or URLs as needed.

- Click on the metric card to the left of any prompt to see more detailed explanations about the metric.

- For each metric, you can also examine how other digital objects have scored by clicking the View Assessments button from the metric page.

- Once finished, you may save, publish, or delete the assessment.

- Publishing the assessment prevents any further changes; because each digital object in a project can be assessed only once with a given rubric, a published assessment cannot be redone. An insignia and analytics will be generated for the object.

- Saving the assessment keeps the responses, comments, and URLs private; they will be visible only to the creators of the digital object, project, and assessment.

- Deleting the assessment will remove all data and responses from the assessment.
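The rules for finishing an assessment can be summarized as a small state model, sketched here under stated assumptions: the `AssessmentStore` class, the `Status` values, and the dictionary of answers are hypothetical and do not correspond to FAIRshake's API. The store keys each assessment by (project, digital object, rubric) so that an object holds only one assessment per rubric within a project, lets saved assessments be overwritten, and rejects edits once an assessment is published. It does not model who can see saved responses, nor the insignia and analytics generated on publication.

```python
from dataclasses import dataclass, field
from enum import Enum

Key = tuple[str, str, str]  # (project, digital object, rubric)


class Status(Enum):
    SAVED = "saved"          # private draft; still editable
    PUBLISHED = "published"  # final; no further changes


@dataclass
class Assessment:
    answers: dict[str, str] = field(default_factory=dict)  # metric -> response
    status: Status = Status.SAVED


class AssessmentStore:
    """Hypothetical model of the save / publish / delete rules, not FAIRshake code."""

    def __init__(self) -> None:
        # At most one assessment per (project, digital object, rubric).
        self._items: dict[Key, Assessment] = {}

    def save(self, key: Key, answers: dict[str, str]) -> None:
        current = self._items.get(key)
        if current is not None and current.status is Status.PUBLISHED:
            raise PermissionError("published assessments cannot be changed")
        self._items[key] = Assessment(dict(answers))

    def publish(self, key: Key) -> None:
        self._items[key].status = Status.PUBLISHED

    def delete(self, key: Key) -> None:
        # Drops the assessment and all of its responses.
        # Assumption: this sketch does not restrict deletion by status.
        self._items.pop(key, None)

    def status(self, key: Key) -> Status:
        return self._items[key].status


# Usage with made-up names.
store = AssessmentStore()
key = ("Example DCC project", "Tool B", "Example rubric")
store.save(key, {"Persistent identifier": "yes"})
store.save(key, {"Persistent identifier": "no"})  # allowed: still a saved draft
store.publish(key)
try:
    store.save(key, {"Persistent identifier": "yes"})
    blocked = False
except PermissionError:
    blocked = True
print(store.status(key), blocked)  # Status.PUBLISHED True
```

Keying by the (project, object, rubric) triple, rather than by a separate assessment ID, makes the one-assessment-per-rubric constraint structural instead of something that has to be checked separately.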